The Missing Checklist for Application Server Decommissioning

Last Wednesday I checked my inbox and came across this message.

It was from one of my readers:

“Hi Adrian, I’m looking for a project work plan for a data warehousing project for the ___ ORGANIZATION ___ and need some guidance and document. Thank you”

Quite a broad request.

So I asked the guy for details.

The guy had come across my project playbooks, and was looking for a project plan for a data warehouse project.

“Can you give me more details?”

His answer:

“It’s a project for data warehousing a legacy system. The front-end application is scheduled to be decommissioned on January 31st, 2023. Post decommissioning, users must be enabled to retroactively edit data in either the legacy system or the new system.”

A decommissioning of a system! How interesting. Now we are getting somewhere!

A few more emails went back and forth.

I wanted to understand how I could best assist the dear follower.

Then it became clear he was basically struggling with getting started.

The system shutdown date had already been scheduled, and the IT guy had only 4 weeks left.

Essentially, the guy had to:

(1) Make a plan

(2) Perform the decommissioning procedure in a safe way

Quite a challenge, right?

Then I started reflecting on my career to see which of my projects had been similar to this one. Having worked in an enterprise IT environment for over 10 years, I had been part of quite a few projects. But a system decommissioning ... wait!

In the shower, it hit me.

One of my projects was rolling out an Enterprise Content Management platform, which included a data migration from an existing “soon-to-break” archiving system, followed by the decommissioning of that system.

The decommissioning involved extracting these documents from the legacy system:

- 25 million customer invoices

- 15 million sales order confirmations

- Around 6 million purchasing documents

- A few other document types with around half a million files

- All documents were stored in PDF format

- The legacy system was running on a Windows server and used an Oracle database for storing its data, so it was fairly easy for my system admin to back up the database.

As you can guess, these were important documents! If my company was getting audited by the tax authority, we had to show the original invoice for a particular sales order as proof that this was a valid transaction. You can’t simply re-print the invoice if you cannot find it in your archive, because this would not be the original one. We couldn’t afford to lose a single document as part of the decommissioning!

Anyway, I digress. The more I think about this project, the more I realize how emotional it was.

Overall, the data takeover went pretty smoothly, but it took longer than we had estimated.

Here is the technical process we used:

- We used three scripts to handle the data extraction and migration to the ECM solution

- Script #1 would extract the PDF file and the corresponding metadata from the source system.

- The script created one ZIP file for each document record, with each ZIP file containing a text file with the metadata like filename, creation date, document type and the actual PDF.

- The ZIP file was placed into a transfer folder from where another script #2 would pick it up and extract the content into an import folder of the ECM solution.

- Script #3 ran inside the ECM solution and its job was to correctly import the PDFs and the metadata into the ECM platform.
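To make the hand-off between script #1 and script #2 more concrete, here is a minimal Python sketch. It is not the code we actually ran. The function names, the `metadata.txt` file name and the use of JSON inside the metadata text file are assumptions made for illustration.

```python
import json
import zipfile
from pathlib import Path


def package_document(pdf_path: Path, metadata: dict, transfer_dir: Path) -> Path:
    """Stand-in for script #1: bundle one PDF and its metadata into one ZIP."""
    transfer_dir.mkdir(parents=True, exist_ok=True)
    zip_path = transfer_dir / f"{pdf_path.stem}.zip"
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        # The actual document
        zf.write(pdf_path, arcname=pdf_path.name)
        # The metadata text file (JSON content is an assumption)
        zf.writestr("metadata.txt", json.dumps(metadata, indent=2))
    return zip_path


def unpack_to_import(zip_path: Path, import_dir: Path) -> list[str]:
    """Stand-in for script #2: extract one ZIP into its own import subfolder."""
    target = import_dir / zip_path.stem
    target.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as zf:
        # Only do this with ZIP files you created yourself
        zf.extractall(target)
        return sorted(zf.namelist())
```

Keeping one ZIP per document record means a failed transfer affects exactly one document, which makes it easier to retry or to handle the odd case manually.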

The key challenge of the data takeover was to ensure that each PDF was properly tagged so we would later know what type of document it was and which organizational entity it belonged to. We also had to ensure all metadata was migrated correctly. Otherwise it would later become difficult to find the right PDF based on, let’s say, the invoice number.
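A simple safeguard is to check the metadata of each document before it goes into the import folder. The following Python sketch shows the idea. The field names in `REQUIRED_FIELDS` are assumptions based on the metadata mentioned above, so adapt them to whatever your target system expects.

```python
# Assumed field names; replace with the fields your target system requires
REQUIRED_FIELDS = ("filename", "creation_date", "document_type", "org")


def missing_fields(metadata: dict) -> list[str]:
    """Return the required fields that are absent or blank."""
    return [
        field
        for field in REQUIRED_FIELDS
        if not str(metadata.get(field) or "").strip()
    ]
```

A document with a non-empty result would be held back for manual review instead of being imported with incomplete tags.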

Why did the data takeover take longer than expected? And what did we learn?

- Remember we were dealing with tens of millions of documents that had to be backed up.

- The legacy system was also quite old, and a lot of administrators had had their hands on it, often not really knowing what they were doing. As a result, the system configuration wasn’t always consistent or complete, which caused problems in the data extraction.

- Never having had a real system owner and with no defined usage guidelines, the (legacy) system had basically been “abused” by the end users from Finance, Sales and Purchasing (they were not to blame, of course).

- People had uploaded all kinds of file formats to the old archive, not just PDFs: anything from Word and Excel files to TIFFs, GIFs and JPEGs. This meant we had to figure out how to convert those non-PDF files into PDFs.

- File naming was another issue. Document files in the legacy system did not follow any kind of naming convention (the system didn’t enforce any). However, for the target system we had established clear file naming standards, like customer_invoice_<org>_<invoice-no>. My developer had to build another script for the renaming of files. Some files even had to be renamed manually, because there was no identifying data in the filename.

- The other challenge for the file takeover was to find the optimal batch size. We migrated the historical data in batches (x number of files per run), and it took us a few attempts to find the ideal batch size for our setup. Every morning, I would check the status of the batch run and see if the files had been transferred correctly or if any manual intervention was required.
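The renaming and the batching can be sketched in a few lines of Python. This is an illustration only, not my developer's script. The helper names `target_name` and `batches`, the lowercase normalization and the `.pdf` suffix are assumptions.

```python
import re
from itertools import islice
from typing import Iterable, Iterator


def target_name(doc_type: str, org: str, number: str) -> str:
    """Build a name following the pattern customer_invoice_<org>_<invoice-no>."""

    def clean(part: str) -> str:
        # Lowercase and replace anything that is not a letter or digit
        cleaned = re.sub(r"[^a-z0-9]+", "_", part.strip().lower()).strip("_")
        if not cleaned:
            # No identifying data: this file needs manual renaming
            raise ValueError("missing identifying data; rename manually")
        return cleaned

    return "_".join(clean(p) for p in (doc_type, org, number)) + ".pdf"


def batches(items: Iterable[str], size: int) -> Iterator[list[str]]:
    """Yield consecutive batches of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(items)
    while batch := list(islice(iterator, size)):
        yield batch
```

Making the batch size a parameter lets you try different values from run to run, which is how we eventually found a size that worked for our setup.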

These were the main challenges we faced in the data takeover and server decommissioning.

Fortunately, it all went well and we were able to shut down our old archiving solution as planned and terminate the contract.

As you can see, a server/system decommissioning can become quite complex.

It’s usually more complex than we assume.

Based on what I have learned from this project, and from people having done similar projects, here are some tips for you if you’re preparing for a system decommissioning.

Tips for your decommissioning project

Determining the data export procedure

The biggest challenge in a decommissioning project: how do you get the data out of the system? Herein also lies the biggest risk for the project.

The data export may not be as simple as downloading the table content with a few SQL commands. The legacy application may use a proprietary file format, or the database content may be scrambled/encrypted in some way.

If that’s the case, you have to reach out to the system vendor and see if there is an export tool available. If not, you either need the system specs to build your own export tool or pay the vendor to build one for you. Practical insight: Software vendors become surprisingly difficult to work with (and highly unresponsive) when they get wind of your plan to cancel their system.
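For the simple case, where the tables can be read with plain SQL, an export script can be quite short. The Python sketch below uses the built-in `sqlite3` module purely as a stand-in for the legacy database. The function name `export_table` is hypothetical, and a real project would use the driver for its own database.

```python
import csv
import sqlite3
from pathlib import Path


def export_table(conn: sqlite3.Connection, table: str, out_path: Path) -> int:
    """Write all rows of `table` to a CSV file and return the row count."""
    # Table names cannot be bound as SQL parameters, so accept plain identifiers only
    if not table.isidentifier():
        raise ValueError(f"unexpected table name: {table!r}")
    cursor = conn.execute(f'SELECT * FROM "{table}"')
    header = [column[0] for column in cursor.description]
    row_count = 0
    with out_path.open("w", newline="", encoding="utf-8") as fh:
        writer = csv.writer(fh)
        writer.writerow(header)
        for row in cursor:
            writer.writerow(row)
            row_count += 1
    return row_count
```

Comparing the returned row count with a `SELECT COUNT(*)` on the source table is a cheap first check that nothing was lost during the export.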

Need to upload the legacy data to a data warehouse? Make it a separate project!

In most cases, there is a need to preserve the legacy system data for legal reasons. If the goal is not just to “park” the data somewhere in a vault, but to actually make the data accessible to users via a data warehouse, this can become a large project which goes far beyond a pure decommissioning.

If you are in that situation, it may be wise to split the project into two separate projects.

Project 1 handles the export of the historical records and loads them onto some interim storage solution (ideally using an open file format like JSON or CSV). Project 1 also completes the actual decommissioning. Project 2 is essentially a data warehousing project. In that project, the target data structure/schema has to be defined, as well as any transformation rules and reports required. Ultimately, the legacy system data is loaded into the data warehouse once it is ready. I think this two-stage approach is better because you can set a clear scope for each project and you avoid scope creep.
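One way to make the hand-over between the two projects verifiable is to store a manifest next to the exported files. This is my own suggestion and not something the two-project split requires. The Python sketch below assumes the export sits in a single folder. The `manifest.json` name and its layout are made up for illustration.

```python
import hashlib
import json
from pathlib import Path


def write_manifest(export_dir: Path) -> Path:
    """List every exported file with its size and SHA-256 checksum."""
    entries = []
    for path in sorted(export_dir.rglob("*")):
        if path.is_file() and path.name != "manifest.json":
            # Reads the whole file at once; hash in chunks for very large files
            data = path.read_bytes()
            entries.append({
                "file": path.relative_to(export_dir).as_posix(),
                "bytes": len(data),
                "sha256": hashlib.sha256(data).hexdigest(),
            })
    manifest = export_dir / "manifest.json"
    manifest.write_text(
        json.dumps({"file_count": len(entries), "files": entries}, indent=2),
        encoding="utf-8",
    )
    return manifest
```

Project 2 can then recompute the checksums before loading the data and confirm that the interim storage still holds exactly what Project 1 exported.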

Now, let’s circle back to the original story.

Remember, one of my followers was asking for a plan for his decommissioning project.

What did I do to help him?

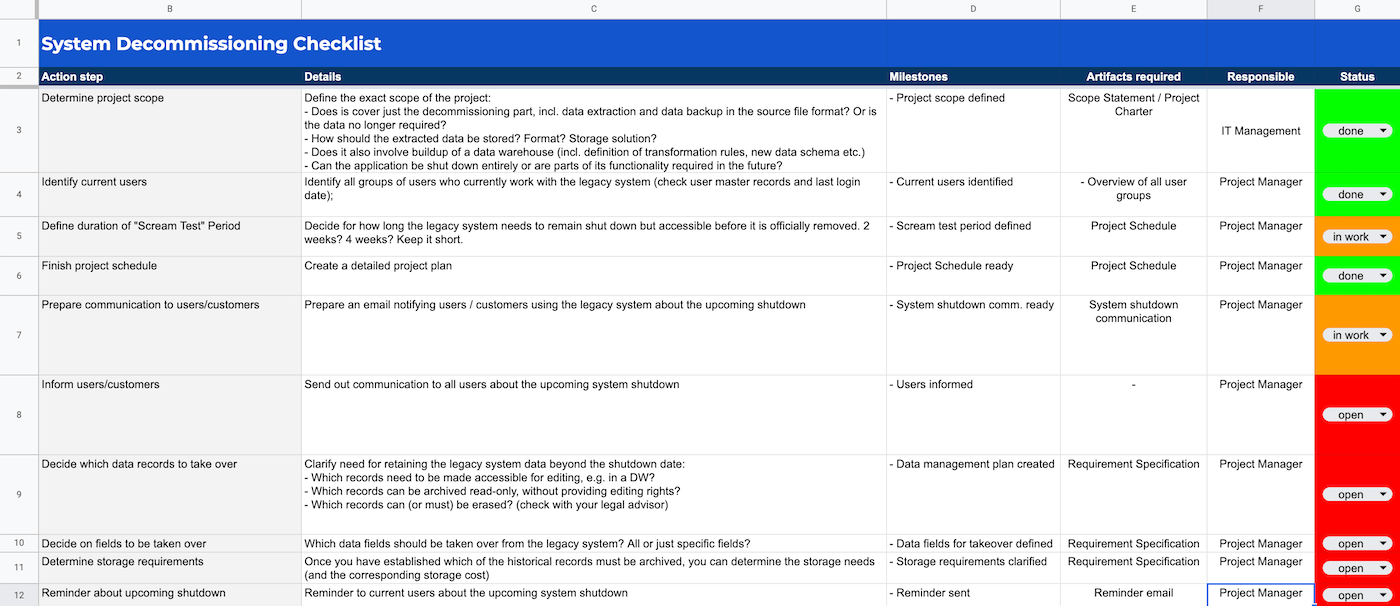

I put together a system decommissioning checklist!

Lots of steps that shouldn’t be forgotten. To make the checklist as comprehensive as possible, I even reached out to some of my IT friends and I collected their suggestions and best practices for a decommissioning project.

And voilà!

Here you can find the checklist:

Introducing ...

The System Decommissioning Checklist

The checklist covers the recommended steps for a typical system decommissioning project.

What does the System Decommissioning Checklist cover?

- Project Management: Key steps to run your project in a professional way using basic measures from the "Project Management Toolbox". Includes steps like scope definition, risk management, planning and communication.

- Functional Steps: If the system is being used by business/functional users, or if the contained data is of relevance to your functional teams, those teams have to be involved. The checklist lists the key activities and decisions that have to be performed or made in collaboration with the functional side.

- Technical Steps: The checklist is not tied to a particular technology or vendor. Therefore we cover the technical procedure on a general level: Analyzing the current system, defining the data export procedure, testing the process, performing the actual extraction and backup and finally, shutting down the system.

- Compliance Good Practices: Certainly, legal and compliance standards vary from organization to organization and country to country, and you definitely want to include a legal or compliance advisor in your project. Still, there are some good "rules" you can follow to ensure the decommissioning is carried out in a transparent manner and with the necessary approvals. An essential step is also to provide the necessary documentation that allows for a later audit.

- Communication: Communication is a big topic in any decommissioning project. That's why we added communication-related steps to the checklist.

Get the System Decommissioning Checklist

- Necessary prework, technical steps and recommended communication

- Instant download (Excel file)

- Secure payment via FastSpring (US payment provider)

- 30-day money-back guarantee if you are not happy

- Contact form for questions and support


About me

Hi, I’m Adrian Neumeyer, founder and CEO of Tactical Project Manager. I have been working as a Senior IT Project Manager for the past ten years. Today my focus is to help people who manage projects — people like you! — by providing practical tips and time-saving tools. Connect with me on LinkedIn.